Oracle 23c has a very interesting feature: Lock Free Reservations. There are great posts already which explain this feature, I'm more interested on the activities behind. For this post, please first read this article. My tests are following it's structure.

In addition to the SQLs I also enabled SQL_TRACE.

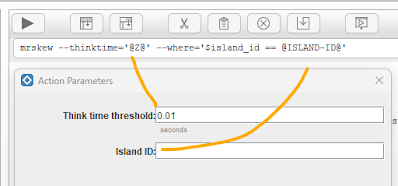

But as the raw trace isn't easy to read at all, I processed them with

mrskew - to be specific with a slightly modified calls.rc to get the sequence and dependency of SQLs. A specific SQL can generate some other calls in the background - this is indicated by the

dep=x entries in the tracefile - in the parsed output it's shown as indent of the statement.

I will reduce the content to a minimum to keep the post readable - the full tracefiles and parsed output can be found

here.

Back to Lock Free Reservations - following Lucas' method:

0 - CREATE TABLE

14:49:39 SESSION_A > CREATE TABLE TICKETSALES(

2 ID NUMBER PRIMARY KEY,

3 NAME VARCHAR2(100),

4 CAPACITY NUMBER RESERVABLE CONSTRAINT MINIMUM_CAPACITY CHECK (CAPACITY >= 10)

5* );

Table TICKETSALES created.

14:49:39 SESSION_A >

this simple statement leads to a LOT of activities:

END-TIMe LINE PARSE-ID EXEC-ID CALL-NAME(BOUND-VALUES) STATEMENT-TEXT

-----------------------------------------------------------------------------------------------------------------------------------------------------

2023-10-26T14:49:39.434617 309 309 0 PARSE CREATE TABLE TICKETSALES( ID NUMBER PRIMARY KE

2023-10-26T14:49:39.435165 327 164 327 · EXEC(1,"TICKETSALES",1) · select obj#,type#,ctime,mtime,stime, status,

2023-10-26T14:49:39.435714 342 334 342 · EXEC(98174) · select o.owner#,o.name,o.namespace,o.remoteo

2023-10-26T14:49:39.435971 352 351 352 · EXEC · select obj# from objerror$

2023-10-26T14:49:39.440226 376 362 376 · EXEC("TICKETSALES","BERX") · select obj#, owner, node from syn$ where nam

2023-10-26T14:49:39.444092 395 385 395 · EXEC("TICKETSALES") · select user#,password,datats#,tempts#,type#,

2023-10-26T14:49:39.450749 543 404 543 · EXEC(131,"TICKETSALES",1,98174,2,"10/2 · insert into obj$(owner#,name,namespace,obj#,

2023-10-26T14:49:39.452329 552 550 552 · · EXEC · · select 1 from dba_tables where owner = 'SY

2023-10-26T14:49:39.455737 629 621 629 · · EXEC("BERX") · · select 1 from sys.bc$ bc, sys.user$ ur whe

2023-10-26T14:49:39.456191 637 636 637 · · EXEC · · select 1 from dba_tables where owner = 'SY

2023-10-26T14:49:39.456597 648 640 648 · · EXEC("BERX") · · select 1 from sys.bc$ bc, sys.user$ ur whe

2023-10-26T14:49:39.456992 655 655 0 · PARSE · create table BERX.SYS_RESERVJRNL_98174 (ORA_

2023-10-26T14:49:39.457295 673 164 673 · · EXEC(131,"SYS_RESERVJRNL_98174",1) · · select obj#,type#,ctime,mtime,stime, statu

2023-10-26T14:49:39.457786 693 164 693 · · EXEC(1,"SYS_RESERVJRNL_98174",1) · · select obj#,type#,ctime,mtime,stime, statu

2023-10-26T14:49:39.466683 832 700 832 · · EXEC(1,0,"3/26/2023 2:26:24","10/26/ · · update obj$ set obj#=:4, type#=:5,ctime=:6

2023-10-26T14:49:39.467141 843 334 843 · · EXEC(98175) · · select o.owner#,o.name,o.namespace,o.remot

2023-10-26T14:49:39.467702 851 850 851 · · EXEC · · select obj# from objerror$

2023-10-26T14:49:39.469494 872 859 872 · · EXEC("SYS_RESERVJRNL_98174","BERX") · · select obj#, owner, node from syn$ where n

2023-10-26T14:49:39.469915 884 385 884 · · EXEC("SYS_RESERVJRNL_98174") · · select user#,password,datats#,tempts#,type

2023-10-26T14:49:39.471682 1025 404 1025 · · EXEC(131,"SYS_RESERVJRNL_98174",1,98 · · insert into obj$(owner#,name,namespace,obj

2023-10-26T14:49:39.474984 1039 1031 1039 · · · EXEC(81) · · · select annotation_name, annotation_value

2023-10-26T14:49:39.477871 1056 1048 1056 · · · EXEC(81) · · · select con#,obj#,rcon#,enabled,nvl(defer

2023-10-26T14:49:39.482689 1073 1065 1073 · · · EXEC(81) · · · select con#,type#,condlength,intcols,rob

2023-10-26T14:49:39.482904 1087 1079 1087 · · · EXEC(188) · · · select intcol#,nvl(pos#,0),col#,nvl(spar

2023-10-26T14:49:39.483590 1111 1101 1111 · · · EXEC(81) · · · select col#, grantee#, privilege#,max(mo

2023-10-26T14:49:39.483848 1129 1121 1129 · · · EXEC(81) · · · select grantee#,privilege#,nvl(col#,0),m

2023-10-26T14:49:39.485398 1249 1139 1249 · · EXEC(98175,,,,2,2,,,,,,,,,,,,16392,, · · insert into deferred_stg$ (obj#, pctfree_s

2023-10-26T14:49:39.487987 1269 1256 1269 · · EXEC(131,"SYS_C008243") · · select con#,spare1 from con$ where owner#=

2023-10-26T14:49:39.490429 1301 1278 1301 · · EXEC(131,"SYS_C008243",8243,0) · · insert into con$(owner#,name,con#,spare1)v

2023-10-26T14:49:39.492926 1316 1308 1316 · · EXEC(8243) · · delete from con$recycle where con#=:1

2023-10-26T14:49:39.498339 1515 1324 1515 · · EXEC(98175,6,0,0,0,,0,,7,7,0,,"----- · · insert into tab$(obj#,ts#,file#,block#,bob

2023-10-26T14:49:39.504496 1710 1522 1710 · · EXEC(98175,"ORA_SAGA_ID$",1,1,23,16, · · insert into col$(obj#,name,intcol#,segcol#

2023-10-26T14:49:39.506437 1898 1522 1898 · · EXEC(98175,"ORA_TXN_ID$",2,2,23,8,,0 · · insert into col$(obj#,name,intcol#,segcol#

2023-10-26T14:49:39.509384 2085 1522 2085 · · EXEC(98175,"ORA_STATUS$",3,3,96,12,, · · insert into col$(obj#,name,intcol#,segcol#

2023-10-26T14:49:39.512042 2272 1522 2272 · · EXEC(98175,"ORA_STMT_TYPE$",4,4,96,1 · · insert into col$(obj#,name,intcol#,segcol#

2023-10-26T14:49:39.513666 2459 1522 2459 · · EXEC(98175,"ID",5,5,2,22,,0,,,,,-127 · · insert into col$(obj#,name,intcol#,segcol#

2023-10-26T14:49:39.516135 2646 1522 2646 · · EXEC(98175,"CAPACITY_OP",6,6,96,7,,0 · · insert into col$(obj#,name,intcol#,segcol#

2023-10-26T14:49:39.516954 2833 1522 2833 · · EXEC(98175,"CAPACITY_RESERVED",7,7,2 · · insert into col$(obj#,name,intcol#,segcol#

2023-10-26T14:49:39.520255 2874 2839 2874 · · EXEC(8243,98175,5,0,,5,0) · · insert into ccol$(con#,obj#,intcol#,pos#,c

2023-10-26T14:49:39.523326 2985 2881 2985 · · EXEC(98175,8243,7,1,,16,,""ID" IS NO · · insert into cdef$(obj#,con#,type#,intcols,

2023-10-26T14:49:39.523695 2989 2988 2989 · · EXEC · · select 1 from dba_tables where owner = 'SY

2023-10-26T14:49:39.524253 3000 2992 3000 · · EXEC("BERX") · · select 1 from sys.bc$ bc, sys.user$ ur whe

2023-10-26T14:49:39.532592 3033 3007 3033 · · EXEC(76530) · · select owner#,name,namespace,remoteowner,l

2023-10-26T14:49:39.536179 3060 3052 3060 · · EXEC(76530) · · select order#,columns,types from access$ w

2023-10-26T14:49:39.536500 3078 3070 3078 · · EXEC(76530) · · select actionsize from trigger$ where obj#

2023-10-26T14:49:39.536800 3095 3087 3095 · · EXEC(76530) · · select action# from trigger$ where obj# =

2023-10-26T14:49:39.537322 3112 3104 3112 · · EXEC(76530) · · select baseobject,type#,update$,insert$,de

2023-10-26T14:49:39.538067 3169 3121 3169 · · EXEC(76530,0,0,0,0,0,76530,1001,7653 · · select tc.type#,tc.intcol#,tc.position#,c.

2023-10-26T14:49:39.539134 3199 3191 3199 · · EXEC(76530) · · select case when (bitand(u.spare1, 16) = 0

2023-10-26T14:49:39.539757 3208 655 3208 · EXEC · create table BERX.SYS_RESERVJRNL_98174 (ORA_

2023-10-26T14:49:39.541061 3330 1256 3330 · EXEC(131,"SYS_C008244") · select con#,spare1 from con$ where owner#=:1

2023-10-26T14:49:39.541381 3355 1278 3355 · EXEC(131,"SYS_C008244",8244,0) · insert into con$(owner#,name,con#,spare1)val

2023-10-26T14:49:39.541466 3364 1308 3364 · EXEC(8244) · delete from con$recycle where con#=:1

2023-10-26T14:49:39.541624 3378 1256 3378 · EXEC(131,"MINIMUM_CAPACITY") · select con#,spare1 from con$ where owner#=:1

2023-10-26T14:49:39.541755 3393 1256 3393 · EXEC(0,"_NEXT_CONSTRAINT") · select con#,spare1 from con$ where owner#=:1

2023-10-26T14:49:39.546000 3423 3400 3423 · EXEC(8250,0,0,"_NEXT_CONSTRAINT") · update con$ set con#=:3,spare1=:4 where owne

2023-10-26T14:49:39.546560 3449 1278 3449 · EXEC(131,"MINIMUM_CAPACITY",8245,0) · insert into con$(owner#,name,con#,spare1)val

2023-10-26T14:49:39.547694 3458 1308 3458 · EXEC(8245) · delete from con$recycle where con#=:1

2023-10-26T14:49:39.548030 3472 1256 3472 · EXEC(131,"SYS_C008246") · select con#,spare1 from con$ where owner#=:1

2023-10-26T14:49:39.548358 3497 1278 3497 · EXEC(131,"SYS_C008246",8246,0) · insert into con$(owner#,name,con#,spare1)val

2023-10-26T14:49:39.548454 3506 1308 3506 · EXEC(8246) · delete from con$recycle where con#=:1

2023-10-26T14:49:39.550422 3520 3512 3520 · · EXEC(98174) · · select pctfree_stg, pctused_stg, size_stg,

2023-10-26T14:49:39.551848 3542 3529 3542 · · EXEC(98174,1) · · select /*+ rule */ bucket_cnt, row_cnt, ca

2023-10-26T14:49:39.552463 3551 3551 0 · PARSE · CREATE UNIQUE INDEX "BERX"."SYS_C008246" on

2023-10-26T14:49:39.553196 3576 3558 3576 · · EXEC("BERX","TICKETSALES","PUBLISH") · · select p.valchar from sys.optstat_user_pre

2023-10-26T14:49:39.554124 3598 3590 3598 · · EXEC("PUBLISH") · · select /*+ no_parallel */ spare4 from sys.

2023-10-26T14:49:39.554385 3618 164 3618 · · EXEC(131,"SYS_C008246",4) · · select obj#,type#,ctime,mtime,stime, statu

2023-10-26T14:49:39.554526 3628 334 3628 · · EXEC(98176) · · select o.owner#,o.name,o.namespace,o.remot

2023-10-26T14:49:39.554625 3632 3631 3632 · · EXEC · · select obj# from objerror$

2023-10-26T14:49:39.554806 3642 385 3642 · · EXEC("SYS_C008246") · · select user#,password,datats#,tempts#,type

2023-10-26T14:49:39.556352 3783 404 3783 · · EXEC(131,"SYS_C008246",4,98176,1,"10 · · insert into obj$(owner#,name,namespace,obj

2023-10-26T14:49:39.557560 3912 700 3912 · · EXEC(98175,2,"10/26/2023 14:49:39"," · · update obj$ set obj#=:4, type#=:5,ctime=:6

2023-10-26T14:49:39.558893 4026 1139 4026 · · EXEC(98176,10,,,,,,,,,,,2,255,,,,0,, · · insert into deferred_stg$ (obj#, pctfree_s

2023-10-26T14:49:39.559206 4049 4031 4049 · · EXEC("BERX","TICKETSALES","PUBLISH") · · select p.valchar from sys.optstat_user_pre

2023-10-26T14:49:39.559370 4059 4051 4059 · · EXEC("PUBLISH") · · select /*+ no_parallel */ spare4 from sys.

2023-10-26T14:49:39.563126 4074 4066 4074 · · EXEC(98174) · · select parttype, partcnt, partkeycols, fla

2023-10-26T14:49:39.563253 4079 3551 4079 · EXEC · CREATE UNIQUE INDEX "BERX"."SYS_C008246" on

2023-10-26T14:49:39.566973 4133 4088 4131 · CLOSE(98176,98174,1,1,1,0,0,0) · insert into icol$(obj#,bo#,intcol#,pos#,segc

2023-10-26T14:49:39.571249 4317 4138 4317 · EXEC(98174,98176,6,0,0,1,1,67108864,40 · insert into ind$(bo#,obj#,ts#,file#,block#,i

2023-10-26T14:49:39.572322 4510 1324 4510 · EXEC(98174,6,0,0,0,,0,,3,3,0,,"------- · insert into tab$(obj#,ts#,file#,block#,bobj#

2023-10-26T14:49:39.573326 4697 1522 4697 · EXEC(98174,"ID",1,1,2,22,,0,,,,,-127,, · insert into col$(obj#,name,intcol#,segcol#,t

2023-10-26T14:49:39.575378 4884 1522 4884 · EXEC(98174,"NAME",2,2,1,100,,0,,,,,0,, · insert into col$(obj#,name,intcol#,segcol#,t

2023-10-26T14:49:39.576473 5071 1522 5071 · EXEC(98174,"CAPACITY",3,3,2,22,,0,,,,, · insert into col$(obj#,name,intcol#,segcol#,t

2023-10-26T14:49:39.577298 5107 2839 5107 · EXEC(8244,98174,3,0,,3,0) · insert into ccol$(con#,obj#,intcol#,pos#,col

2023-10-26T14:49:39.578361 5212 2881 5212 · EXEC(98174,8244,7,1,,22,,""CAPACITY" I · insert into cdef$(obj#,con#,type#,intcols,co

2023-10-26T14:49:39.578730 5248 2839 5248 · EXEC(8245,98174,3,0,,3,0) · insert into ccol$(con#,obj#,intcol#,pos#,col

2023-10-26T14:49:39.580397 5353 2881 5353 · EXEC(98174,8245,1,1,,14,,"CAPACITY >= · insert into cdef$(obj#,con#,type#,intcols,co

2023-10-26T14:49:39.580609 5389 2839 5389 · EXEC(8246,98174,1,1,,1,0) · insert into ccol$(con#,obj#,intcol#,pos#,col

2023-10-26T14:49:39.580989 5493 2881 5493 · EXEC(98174,8246,2,1,,0,,,0,,0,,0,,0,,9 · insert into cdef$(obj#,con#,type#,intcols,co

2023-10-26T14:49:39.581629 5496 5495 5496 · EXEC · select 1 from dba_tables where owner = 'SYS'

2023-10-26T14:49:39.582183 5507 5499 5507 · EXEC("BERX") · select 1 from sys.bc$ bc, sys.user$ ur where

2023-10-26T14:49:39.582290 5510 0 0 XCTEND #():C:\Users\berx\Downloads\FREE_ora_53177_SES

2023-10-26T14:49:39.583916 5638 700 5638 · EXEC(98174,2,"10/26/2023 14:49:39","10 · update obj$ set obj#=:4, type#=:5,ctime=:6,m

2023-10-26T14:49:39.584434 5640 309 5640 EXEC CREATE TABLE TICKETSALES( ID NUMBER PRIMARY KE

.

In line 309 the parse of CREATE TABLE statement began. After some checks, in line 6565 the CREATE TABLE for BERX.SYS_RESERVJRNL_98174 is parsed - and executed in line 3208. The UNIQUE INDEX SYS_C008246 is parsed at line 3551 and executed in 4079. All these activities are completed by a COMMIT (XCTEND) in line 5510 and the finish of CREATE TABLE in line 5640.

A lot of work to be done for a simple CREATE TABLE.

1 - INSERT

14:49:40 SESSION_A > insert into ticketsales values (

2 1

3 , 'Faster'

4 , 2000

5* );

1 row inserted.

14:49:40 SESSION_A >

END-TIMe LINE PARSE-ID EXEC-ID CALL-NAME(BOUND-VALUES) STATEMENT-TEXT

-----------------------------------------------------------------------------------------------------------------------------------------------------

2023-10-26T14:49:39.959350 5750 5750 0 PARSE insert into ticketsales values ( 1 , 'Faster'

2023-10-26T14:49:39.959587 5758 3512 5758 · EXEC(98174) · select pctfree_stg, pctused_stg, size_stg,in

2023-10-26T14:49:39.961213 5773 5765 5773 · EXEC(98174) · delete from deferred_stg$ where obj# = :1

2023-10-26T14:49:39.961868 5800 5782 5800 · EXEC(6,15,202) · select type#,blocks,extents,minexts,maxexts,

2023-10-26T14:49:39.964075 5909 5809 5909 · EXEC(15,202,3,6,8,1,1,2147483645,128,0 · insert into seg$ (file#,block#,type#,ts#,blo

2023-10-26T14:49:39.964592 5924 5916 5924 · EXEC(98176) · select indmethod# from ind$ where obj#=:1

2023-10-26T14:49:39.964835 5936 3512 5936 · EXEC(98176) · select pctfree_stg, pctused_stg, size_stg,in

2023-10-26T14:49:39.965039 5946 5765 5946 · EXEC(98176) · delete from deferred_stg$ where obj# = :1

2023-10-26T14:49:39.965318 5965 5782 5965 · EXEC(6,15,210) · select type#,blocks,extents,minexts,maxexts,

2023-10-26T14:49:39.966085 6067 5809 6067 · EXEC(15,210,3,6,8,1,1,2147483645,128,0 · insert into seg$ (file#,block#,type#,ts#,blo

2023-10-26T14:49:39.966481 6081 6073 6081 · EXEC(98174) · delete from superobj$ where subobj# = :1

2023-10-26T14:49:39.967966 6097 6089 6097 · EXEC(98174) · select /*+ index(ts) */ spare1 from sys.tab_

2023-10-26T14:49:39.968547 6109 1048 6109 · · EXEC(73) · · select con#,obj#,rcon#,enabled,nvl(defer,0

2023-10-26T14:49:39.968699 6119 1065 6119 · · EXEC(73) · · select con#,type#,condlength,intcols,robj#

2023-10-26T14:49:39.968823 6128 1079 6128 · · EXEC(173) · · select intcol#,nvl(pos#,0),col#,nvl(spare1

2023-10-26T14:49:39.971061 6141 1101 6141 · · EXEC(73) · · select col#, grantee#, privilege#,max(mod(

2023-10-26T14:49:39.971203 6151 1121 6151 · · EXEC(73) · · select grantee#,privilege#,nvl(col#,0),max

2023-10-26T14:49:39.972250 6166 6158 6166 · EXEC(98174) · delete from tab_stats$ where obj#=:1

2023-10-26T14:49:39.976431 6380 6174 6380 · EXEC(6,15,202,0,,0,,3,3,0,,"---------- · update tab$ set ts#=:2,file#=:3,block#=:4,bo

2023-10-26T14:49:39.976978 6392 1048 6392 · · EXEC(75) · · select con#,obj#,rcon#,enabled,nvl(defer,0

2023-10-26T14:49:39.977121 6402 1065 6402 · · EXEC(75) · · select con#,type#,condlength,intcols,robj#

2023-10-26T14:49:39.977231 6411 1079 6411 · · EXEC(174) · · select intcol#,nvl(pos#,0),col#,nvl(spare1

2023-10-26T14:49:39.977703 6424 1101 6424 · · EXEC(75) · · select col#, grantee#, privilege#,max(mod(

2023-10-26T14:49:39.977851 6434 1121 6434 · · EXEC(75) · · select grantee#,privilege#,nvl(col#,0),max

2023-10-26T14:49:39.979098 6449 6441 6449 · EXEC(98176) · delete from ind_stats$ where obj#=:1

2023-10-26T14:49:39.982440 6626 6457 6626 · EXEC(6,15,210,1,1,0,4097,10,2,255,,,,, · update ind$ set ts#=:2,file#=:3,block#=:4,in

2023-10-26T14:49:39.985174 6738 6634 6738 · EXEC(5,8,1,1,2147483645,128,0,131,8,0, · update seg$ set type#=:4,blocks=:5,extents=:

2023-10-26T14:49:39.985908 6846 6634 6846 · EXEC(6,8,1,1,2147483645,128,0,131,8,0, · update seg$ set type#=:4,blocks=:5,extents=:

2023-10-26T14:49:39.987619 6856 6848 6856 · EXEC(00008151.001C.0001) · select condition from cdef$ where rowid=:1

2023-10-26T14:49:39.993765 6859 5750 6859 EXEC insert into ticketsales values ( 1 , 'Faster'

2023-10-26T14:49:40.392046 6866 5750 6859 CLOSE insert into ticketsales values ( 1 , 'Faster'

Less work, still some background activities (you can see deferred segment creation at work, have a close look!)

2 - COMMIT

14:49:40 SESSION_A > commit;

Commit complete.

14:49:40 SESSION_A >

2023-10-26T14:49:40.393714 6871 6871 0 PARSE commit

2023-10-26T14:49:40.393792 6872 0 0 XCTEND #():C:\Users\berx\Downloads\FREE_ora_53177_SES

2023-10-26T14:49:40.394001 6873 6871 6873 EXEC commit

2023-10-26T14:50:15.673448 6879 6871 6873 CLOSE commit

COMMITs are quite boring - at least in tracefiles.

3 - UPDATE in Session A

14:50:27 SESSION_A > update ticketsales set capacity = capacity-200 where id = 1;

1 row updated.

14:50:46 SESSION_A >

14:50:46 SESSION_A > select xid from v$transaction where addr=(select taddr from v$session where sid= SYS_CONTEXT ('USERENV', 'SID') ) ;

XID

___________________

070015001B050000

14:50:52 SESSION_A > select * from SYS_RESERVJRNL_98174;

ORA_SAGA_ID$ ORA_TXN_ID$ ORA_STATUS$ ORA_STMT_TYPE$ ID CAPACITY_OP CAPACITY_RESERVED

_______________ ___________________ _______________ ___________________ _____ ______________ ____________________

070015001B050000 ACTIVE UPDATE 1 - 200

14:50:54 SESSION_A >

2023-10-26T14:50:46.208878 7127 7127 0 PARSE update ticketsales set capacity = capacity-200

2023-10-26T14:50:46.209519 7135 3512 7135 · · EXEC(98175) · · select pctfree_stg, pctused_stg, size_stg,

2023-10-26T14:50:46.209750 7145 3512 7145 · · EXEC(98175) · · select pctfree_stg, pctused_stg, size_stg,

2023-10-26T14:50:46.210172 7152 7152 0 · PARSE · SELECT NVL(((select NVL(sum(CAPACITY_RESERVE

2023-10-26T14:50:46.210540 7160 3512 7160 · · EXEC(98175) · · select pctfree_stg, pctused_stg, size_stg,

2023-10-26T14:50:46.210649 7170 3512 7170 · · EXEC(98175) · · select pctfree_stg, pctused_stg, size_stg,

2023-10-26T14:50:46.212027 7180 3512 7180 · · · EXEC(98175) · · · select pctfree_stg, pctused_stg, size_st

2023-10-26T14:50:46.212371 7187 7187 0 · · PARSE · · SELECT /* OPT_DYN_SAMP */ /*+ ALL_ROWS IGN

2023-10-26T14:50:46.212629 7195 3512 7195 · · · EXEC(98175) · · · select pctfree_stg, pctused_stg, size_st

2023-10-26T14:50:46.213665 7210 7187 7210 · · EXEC(070015001B0500,1) · · SELECT /* OPT_DYN_SAMP */ /*+ ALL_ROWS IGN

2023-10-26T14:50:46.214495 7223 3512 7223 · · · EXEC(98175) · · · select pctfree_stg, pctused_stg, size_st

2023-10-26T14:50:46.214884 7230 7230 0 · · PARSE · · SELECT /* OPT_DYN_SAMP */ /*+ ALL_ROWS IGN

2023-10-26T14:50:46.215153 7238 3512 7238 · · · EXEC(98175) · · · select pctfree_stg, pctused_stg, size_st

2023-10-26T14:50:46.216372 7248 7230 7248 · · EXEC(1) · · SELECT /* OPT_DYN_SAMP */ /*+ ALL_ROWS IGN

2023-10-26T14:50:46.217385 7268 7152 7268 · EXEC(070015001B0500,1,) · SELECT NVL(((select NVL(sum(CAPACITY_RESERVE

2023-10-26T14:50:46.218076 7282 3512 7282 · · EXEC(98175) · · select pctfree_stg, pctused_stg, size_stg,

2023-10-26T14:50:46.262524 7307 7289 7307 · · EXEC("SYS_RESERVJRNL_98174",131,2) · · select decode(u.type#, 2, u.ext_username,

2023-10-26T14:50:46.263063 7330 7330 0 · PARSE · INSERT INTO "SYS_RESERVJRNL_98174" (ORA_SAGA

2023-10-26T14:50:46.263423 7338 3512 7338 · · EXEC(98175) · · select pctfree_stg, pctused_stg, size_stg,

2023-10-26T14:50:46.264027 7348 3512 7348 · · EXEC(98175) · · select pctfree_stg, pctused_stg, size_stg,

2023-10-26T14:50:46.264234 7358 5765 7358 · · EXEC(98175) · · delete from deferred_stg$ where obj# = :1

2023-10-26T14:50:46.264475 7377 5782 7377 · · EXEC(6,15,218) · · select type#,blocks,extents,minexts,maxext

2023-10-26T14:50:46.265060 7479 5809 7479 · · EXEC(15,218,3,6,8,1,1,2147483645,2,0 · · insert into seg$ (file#,block#,type#,ts#,b

2023-10-26T14:50:46.265305 7493 7485 7493 · · EXEC(98175) · · delete from superobj$ where subobj# = :1

2023-10-26T14:50:46.265439 7504 6089 7504 · · EXEC(98175) · · select /*+ index(ts) */ spare1 from sys.ta

2023-10-26T14:50:46.267489 7519 7511 7519 · · EXEC(98175) · · delete from tab_stats$ where obj#=:1

2023-10-26T14:50:46.268302 7728 6174 7728 · · EXEC(6,15,218,0,,0,,7,7,0,,"-------- · · update tab$ set ts#=:2,file#=:3,block#=:4,

2023-10-26T14:50:46.268724 7833 6634 7833 · · EXEC(5,8,1,1,2147483645,2,0,131,2,0, · · update seg$ set type#=:4,blocks=:5,extents

2023-10-26T14:50:46.269629 7871 7330 7871 · EXEC(,070015001B0500,"ACTIVE","UPDATE" · INSERT INTO "SYS_RESERVJRNL_98174" (ORA_SAGA

2023-10-26T14:50:46.269699 7873 7127 7873 EXEC update ticketsales set capacity = capacity-200

2023-10-26T14:50:51.931299 7881 7127 7873 CLOSE update ticketsales set capacity = capacity-200

The update on TICKETSALES also inititates an INSERT INTO "SYS_RESERVJRNL_98174" to reflect this reservation.

4 - UPDATE in Session B

14:51:11 SESSION_B > update ticketsales set capacity = capacity - 800 where id = 1;

1 row updated.

14:51:11 SESSION_B >

2023-10-26T14:51:11.342290 64 64 0 PARSE update ticketsales set capacity = capacity - 8

2023-10-26T14:51:11.343719 69 69 0 · PARSE · SELECT NVL(((select NVL(sum(CAPACITY_RESERVE

2023-10-26T14:51:11.346632 76 76 0 · · PARSE · · SELECT /* OPT_DYN_SAMP */ /*+ ALL_ROWS IGN

2023-10-26T14:51:11.349277 91 76 91 · · EXEC(070015001B0500,1) · · SELECT /* OPT_DYN_SAMP */ /*+ ALL_ROWS IGN

2023-10-26T14:51:11.351477 109 101 109 · · EXEC(1) · · SELECT /* OPT_DYN_SAMP */ /*+ ALL_ROWS IGN

2023-10-26T14:51:11.352846 129 69 129 · EXEC(0A000B00150500,1,) · SELECT NVL(((select NVL(sum(CAPACITY_RESERVE

2023-10-26T14:51:11.353405 177 140 177 · EXEC(,0A000B00150500,"ACTIVE","UPDATE" · INSERT INTO "SYS_RESERVJRNL_98174" (ORA_SAGA

2023-10-26T14:51:11.353495 179 64 179 EXEC update ticketsales set capacity = capacity - 8

2023-10-26T14:51:11.641520 184 64 179 CLOSE update ticketsales set capacity = capacity - 8

This shows the same pattern: the update is reflected as an

INSERT INTO "SYS_RESERVJRNL_98174".

5 - SELECT in Session B

14:51:11 SESSION_B > select capacity from ticketsales where id = 1

2 -- 2000

3* ;

CAPACITY

___________

2000

14:51:12 SESSION_B >

14:51:12 SESSION_B >

14:51:12 SESSION_B >

14:51:12 SESSION_B >

14:51:12 SESSION_B > select xid from v$transaction where addr=(select taddr from v$session where sid= SYS_CONTEXT ('USERENV', 'SID') ) ;

XID

___________________

0A000B0015050000

14:51:22 SESSION_B > select * from SYS_RESERVJRNL_98174;

ORA_SAGA_ID$ ORA_TXN_ID$ ORA_STATUS$ ORA_STMT_TYPE$ ID CAPACITY_OP CAPACITY_RESERVED

_______________ ___________________ _______________ ___________________ _____ ______________ ____________________

0A000B0015050000 ACTIVE UPDATE 1 - 800

2023-10-26T14:51:11.644507 201 201 0 PARSE select capacity from ticketsales where id = 1

2023-10-26T14:51:11.644574 202 201 202 EXEC select capacity from ticketsales where id = 1

2023-10-26T14:51:11.644648 204 201 202 FETCH select capacity from ticketsales where id = 1

2023-10-26T14:51:11.645034 208 201 202 FETCH select capacity from ticketsales where id = 1

2023-10-26T14:51:21.707923 213 201 202 CLOSE select capacity from ticketsales where id = 1

On this layer, each SEssion sees only its own transaction in

SYS_RESERVJRNL_98174.

6 - UPDATE in Session C

14:51:54 SESSION_C > update ticketsales

2* set capacity = capacity - 500 where id = 1;

1 row updated.

14:51:55 SESSION_C > select xid from v$transaction where addr=(select taddr from v$session where sid= SYS_CONTEXT ('USERENV', 'SID') ) ;

XID

___________________

0400010033050000

14:52:02 SESSION_C >

14:52:02 SESSION_C > select * from SYS_RESERVJRNL_98174;

ORA_SAGA_ID$ ORA_TXN_ID$ ORA_STATUS$ ORA_STMT_TYPE$ ID CAPACITY_OP CAPACITY_RESERVED

_______________ ___________________ _______________ ___________________ _____ ______________ ____________________

0400010033050000 ACTIVE UPDATE 1 - 500

14:52:10 SESSION_C >

14:52:10 SESSION_C > select capacity from ticketsales where id = 1

2* ;

CAPACITY

___________

2000

14:52:24 SESSION_C >

2023-10-26T14:51:55.202602 67 67 0 PARSE update ticketsales set capacity = capacity - 5

2023-10-26T14:51:55.203106 87 72 87 · EXEC(04000100330500,1,) · SELECT NVL(((select NVL(sum(CAPACITY_RESERVE

2023-10-26T14:51:55.203951 135 98 135 · EXEC(,04000100330500,"ACTIVE","UPDATE" · INSERT INTO "SYS_RESERVJRNL_98174" (ORA_SAGA

2023-10-26T14:51:55.204026 137 67 137 EXEC update ticketsales set capacity = capacity - 5

2023-10-26T14:52:02.051276 144 67 137 CLOSE update ticketsales set capacity = capacity - 5

7 - 2nd UPDATE in Session C

14:52:24 SESSION_C > update ticketsales

2 set capacity = capacity - 700 where id = 1

3 -- ORA-2290

4* ;

Error starting at line : 1 in command -

update ticketsales

set capacity = capacity - 700 where id = 1

-- ORA-2290;

Error report -

ORA-02290: check constraint (BERX.MINIMUM_CAPACITY) violated

14:52:33 SESSION_C >

2023-10-26T14:52:33.244339 239 239 0 PARSE update ticketsales set capacity = capacity - 7

2023-10-26T14:52:33.244482 254 72 254 · EXEC(04000100330500,1,) · SELECT NVL(((select NVL(sum(CAPACITY_RESERVE

2023-10-26T14:52:33.245893 268 260 268 · EXEC(8245) · select o.name, c.name, decode(bitand(cd.defe

2023-10-26T14:52:33.246097 279 239 279 EXEC update ticketsales set capacity = capacity - 7

280 239 279 ERROR update ticketsales set capacity = capacity - 7

2023-10-26T14:52:33.255272 287 239 279 CLOSE update ticketsales set capacity = capacity - 7

This 2nd update fails - as in trace line 280:

ERROR #140437751612752:err=2290 tim=6674195429

with error code 2290.

For me it's not visible how Lock Free Reservations knows about all the "others" reservations, as Session C only see it's own reservation.

8 - COMMIT in Session A

14:53:01 SESSION_A > commit;

Commit complete.

14:53:01 SESSION_A >

2023-10-26T14:53:01.129023 7942 7942 0 PARSE commit

2023-10-26T14:53:01.129045 7943 0 0 XCTEND #():C:\Users\berx\Downloads\FREE_ora_53177_SES

9 - ROLLBACK in Session B

14:53:30 SESSION_B > rollback;

Rollback complete.

14:53:30 SESSION_B >

14:53:30 SESSION_B > select capacity from ticketsales where id = 1

2 -- 1800

3* ;

CAPACITY

___________

1800

14:53:30 SESSION_B >

2023-10-26T14:53:29.985587 263 0 0 XCTEND #():C:\Users\berx\Downloads\FREE_ora_53652_SES

2023-10-26T14:53:30.212122 269 269 0 · PARSE · select condition from cdef$ where rowid=:1

2023-10-26T14:53:30.212606 277 269 277 · EXEC(00008151.001C.0001) · select condition from cdef$ where rowid=:1

2023-10-26T14:53:30.212672 278 269 277 · FETCH(00008151.001C.0001) · select condition from cdef$ where rowid=:1

2023-10-26T14:53:30.212716 279 269 277 · CLOSE(00008151.001C.0001) · select condition from cdef$ where rowid=:1

2023-10-26T14:53:30.214397 285 285 0 PARSE select capacity from ticketsales where id = 1

2023-10-26T14:53:30.214519 286 285 286 EXEC select capacity from ticketsales where id = 1

10 - UPDATE in Session C

14:53:50 SESSION_C > update ticketsales

2 set capacity = capacity - 700 where id = 1

3* ;

1 row updated.

14:53:50 SESSION_C >

14:53:50 SESSION_C > commit;

Commit complete.

14:53:50 SESSION_C >

14:53:50 SESSION_C > select capacity from ticketsales where id = 1

2 -- 600

3* ;

CAPACITY

___________

600

14:53:51 SESSION_C >

2023-10-26T14:53:50.227511 470 470 0 PARSE update ticketsales set capacity = capacity - 7

2023-10-26T14:53:50.227762 485 72 485 · EXEC(04000100330500,1,) · SELECT NVL(((select NVL(sum(CAPACITY_RESERVE

2023-10-26T14:53:50.228238 523 98 523 · EXEC(,04000100330500,"ACTIVE","UPDATE" · INSERT INTO "SYS_RESERVJRNL_98174" (ORA_SAGA

2023-10-26T14:53:50.228267 524 470 524 EXEC update ticketsales set capacity = capacity - 7

2023-10-26T14:53:50.473869 536 536 0 PARSE commit

2023-10-26T14:53:50.473927 537 0 0 XCTEND #():C:\Users\berx\Downloads\FREE_ora_53693_SES

2023-10-26T14:53:50.474444 550 542 550 · EXEC(04000100330500) · update (select B$.ID,B$.CAPACITY,ORA_ESCR_AG

2023-10-26T14:53:50.474984 571 563 571 · EXEC(04000100330500) · delete from BERX.SYS_RESERVJRNL_98174 where

2023-10-26T14:53:50.475307 574 536 574 EXEC commit

2023-10-26T14:53:51.270715 578 536 574 CLOSE commit

2023-10-26T14:53:51.272280 587 579 587 · EXEC(00008151.001C.0001) · select condition from cdef$ where rowid=:1

2023-10-26T14:53:51.273447 595 595 0 PARSE select capacity from ticketsales where id = 1

2023-10-26T14:53:51.273513 596 595 596 EXEC select capacity from ticketsales where id = 1

A lot is going on behind the scene for making Lock Free Reservations work.

Not all details are clear to me yet, at least I should have given some insights about one method to investigate such questions.